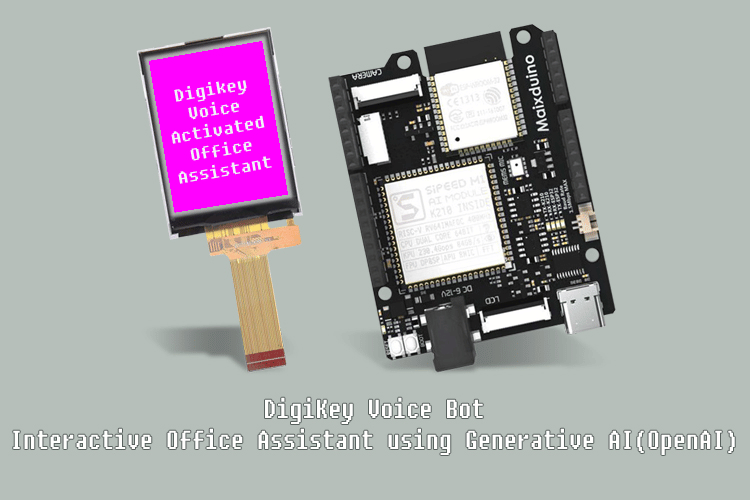

Hello everyone! In this project, we will create a voice-activated office assistant using the Maixduino board and generative AI technology.

The basic idea is simple: when the user asks a question using the Maixduino board's microphone, the device will capture the question, send it to a knowledge base (using basic data from the DigiKey website), and display the answer on the Maixduino screen.

The process is straightforward, and there are no complex circuits involved. I’ll explain everything step by step in an easy-to-understand way. Let’s get started!

First, let me explain the technologies used in this project. I have utilized two main technologies: IoT and Generative AI. Here are the components required for each:

Components Required for IoT

TFT Display

Power Bank

Components Required for Generative AI

LangChain

ChromaDB

OpenAI Key

Swagger API

Amazon EC2

Watch the YouTube video for a full demonstration of the project.

Collecting Data from the DigiKey Website

Let's start with the Generative AI process, as this is a one-time setup. First, we need to gather DigiKey URLs that provide basic information. Below are some sample URLs I used for this project. While we can add as many URLs as we want, I've included just a few for demonstration purposes.

You can find the list of URLs I used here: digikey-assistant/urls.

Creating a Knowledge Base for DigiKey Data

The next step is to create the knowledge base using the content from the URLs we gathered. We will use the script located at digikey-assistant/db.py in the repository karthick965938/digikey-assistant.

The knowledge base acts like a database that stores the information and makes it searchable. The script builds a ChromaDB database from the content of the DigiKey pages, using the LangChain and OpenAI packages.
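To make the idea of a knowledge base more concrete, here is a minimal sketch that uses only the Python standard library. It is not the author's db.py and it does not call ChromaDB, LangChain, or OpenAI. The class `ToyKnowledgeBase`, the chunk sizes, and the bag-of-words "embedding" are all assumptions chosen for illustration; a real vector store such as ChromaDB would store embeddings produced by a model instead of word counts.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, chunk_size=200, overlap=40):
    """Split text into overlapping character windows (sizes are illustrative)."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyKnowledgeBase:
    """Hypothetical in-memory store: keeps chunks, their source, and a vector."""

    def __init__(self):
        self.entries = []  # list of (chunk_text, source, vector)

    def add_document(self, text, source):
        for chunk in split_into_chunks(text):
            self.entries.append((chunk, source, embed(chunk)))

    def search(self, query, k=2):
        """Return the k chunks most similar to the query, best first."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[2]), reverse=True)
        return [(chunk, source) for chunk, source, _ in ranked[:k]]


# Sample text adapted from the example answers in this article.
kb = ToyKnowledgeBase()
kb.add_document(
    "DigiKey is an authorized distributor with headquarters in "
    "Thief River Falls, Minnesota, USA.",
    "about-page",
)
kb.add_document(
    "Orders can be cancelled by contacting your local support center.",
    "orders-page",
)
print(kb.search("Where is the headquarters?", k=1))
```

The same three steps (split the pages into chunks, turn each chunk into a vector, search by similarity) are what a vector database automates at a larger scale.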

Preparing the LangChain QA Chain

First, let me explain what LangChain and a QA chain are. LangChain is a framework designed for building applications that utilize large language models (LLMs). It provides tools and components that simplify the integration of language models with various data sources, making it easier to develop chatbots, question-answering systems, and other NLP-driven applications.

A QA chain in LangChain refers to a series of components that facilitate question-answering tasks. This typically involves:

Retrieval: Finding relevant information or documents based on a user's query.

Processing: Using an LLM to interpret the question and generate an answer.

Output: Delivering the final response to the user.

Overall, LangChain and its QA chain capabilities enable developers to efficiently build and deploy sophisticated language model applications.
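The three stages above can be sketched in plain Python. This is a simplified illustration, not LangChain's API and not the author's index.py: `make_qa_chain`, `build_prompt`, `fake_retrieve`, and `fake_llm` are hypothetical names, and the "LLM" here is a stub that simply returns the first context line so the example runs without an API key.

```python
from typing import Callable, List


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


def make_qa_chain(
    retrieve: Callable[[str], List[str]],
    llm: Callable[[str], str],
) -> Callable[[str], str]:
    """Wire a retriever and a language model into a single callable."""

    def run(question: str) -> str:
        chunks = retrieve(question)              # 1. Retrieval
        prompt = build_prompt(question, chunks)  # 2. Processing
        return llm(prompt).strip()               # 3. Output

    return run


def fake_retrieve(question: str) -> List[str]:
    """Stub retriever: always returns the same chunk (assumption for the demo)."""
    return ["DigiKey is headquartered in Thief River Falls, Minnesota, USA."]


def fake_llm(prompt: str) -> str:
    """Stub model: echoes the first context line instead of calling a real LLM."""
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


qa_chain = make_qa_chain(fake_retrieve, fake_llm)
print(qa_chain("Where is DigiKey based?"))
```

In a real setup, `retrieve` would query the knowledge base and `llm` would call a hosted model; keeping both as plain callables makes each stage easy to swap or test in isolation.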

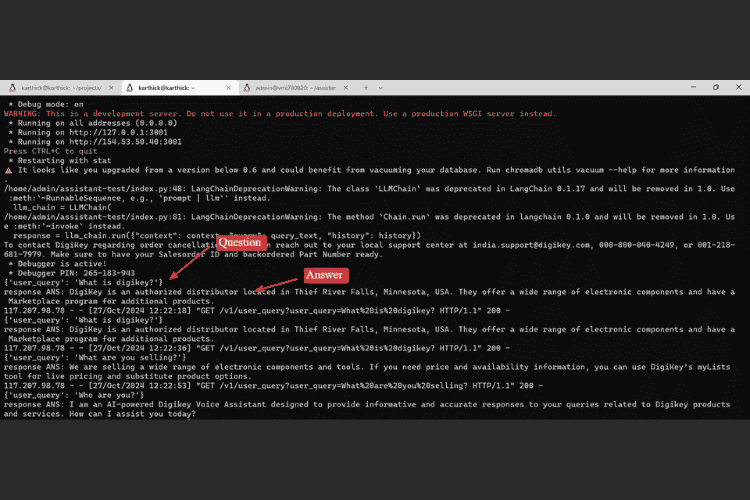

Using this framework, I created a QA chain in the script located at digikey-assistant/index.py in the repository karthick965938/digikey-assistant. When a question is asked, the system prepares the answer. Here are some examples:

question = "Who are you?"

answer = generate_answer(question)

“I am an AI-powered Digikey Voice Assistant designed to provide informative and accurate responses to your queries related to Digikey products and services.”

question = "What is the Digikey?"

answer = generate_answer(question)

“DigiKey is an authorized distributor with headquarters in Thief River Falls, Minnesota, USA. They also have regional support centers in various countries.”

question = "How can I contact them?"

answer = generate_answer(question)

“To contact DigiKey regarding order cancellations, you can reach out to your local support center at [email protected] or call 000-800-040-4249 or 001-218-681-7979.”

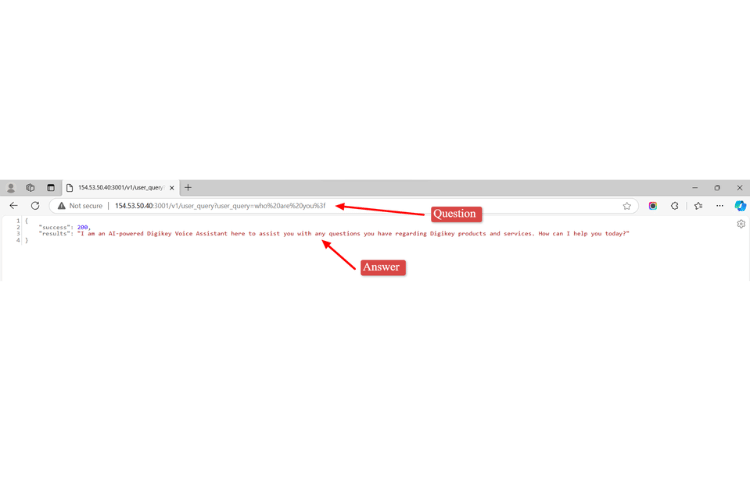

Yes, everything is working smoothly! I have also prepared a Swagger API for the functions mentioned above. This API will be very helpful for making API calls from the Maixduino board. You can find the exact code in the script located at digikey-assistant/final_api.py in the repository karthick965938/digikey-assistant.

The next step is to deploy it on an AWS EC2 server to enable communication with the Maixduino board over Wi-Fi. I have deployed my code at http://154.53.50.40:3001. The reason for deploying the Swagger API on the EC2 server is to allow API calls from anywhere, providing a smooth connection for the Maixduino board.
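As a rough illustration of what such a question-answering endpoint looks like, here is a sketch built on Python's standard `http.server` module. It is not the author's final_api.py and it does not generate Swagger documentation. The `/ask` path, the `question` query parameter, the JSON response shape, and the helper names are all assumptions, and `generate_answer` is replaced by a stub.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlencode, urlparse


def generate_answer(question):
    """Stub standing in for the real QA chain call."""
    return f"(stub) You asked: {question}"


class AskHandler(BaseHTTPRequestHandler):
    """Handles GET /ask?question=... and replies with JSON."""

    def do_GET(self):
        parsed = urlparse(self.path)
        if parsed.path != "/ask":
            self._send_json(404, {"error": "not found"})
            return
        question = parse_qs(parsed.query).get("question", [""])[0].strip()
        if not question:
            self._send_json(400, {"error": "missing 'question' parameter"})
            return
        self._send_json(200, {"question": question,
                              "answer": generate_answer(question)})

    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet in this demo


def make_server(host="127.0.0.1", port=0):
    """Create the server; port 0 lets the OS pick a free port."""
    return ThreadingHTTPServer((host, port), AskHandler)


def start_in_background(server):
    """Serve requests on a daemon thread so the caller can keep running."""
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    return thread


def ask(base_url, question, timeout=5):
    """Client side: send a question and return the decoded JSON reply."""
    url = f"{base_url}/ask?{urlencode({'question': question})}"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

To try it locally, create the server with `make_server(port=3001)`, call `serve_forever()` on it, and query it with `ask("http://127.0.0.1:3001", "Who are you?")`. The board makes the same kind of HTTP request over Wi-Fi, just against the public address of the deployed server.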

Communicating with the Maixduino Board

Now that we have everything set up for the Generative AI side, it's time to prepare the code for the IoT part. This will involve three main components:

Speech Recognition: To allow the user to ask questions.

Wi-Fi: To make API calls.

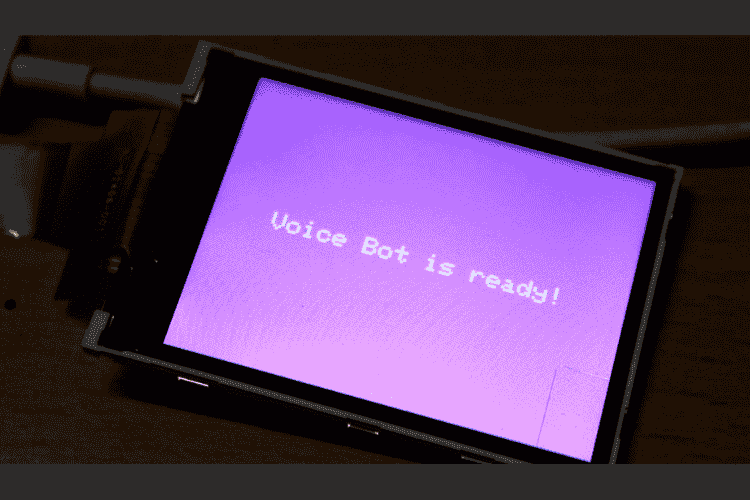

Sipeed ST7789 LCD: To display the response (the answer).

The idea is to train the system with some basic questions about DigiKey as voice commands. This will serve as a starting point. When the user asks a question, the system will send an API request using the Wi-Fi connection. It will then receive the response and display it on the LCD screen.

Based on these components, I developed the C++ script located at digikey-assistant/maixduino/main.cpp in the repository karthick965938/digikey-assistant.
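The firmware itself is written in C++, but the control flow can be modeled in a few lines of Python for clarity. The sketch below is not a port of main.cpp: the command table, the 26-characters-per-line and 10-line limits, and the function names are assumptions made for illustration. The `ask` argument stands for whatever function performs the API call over Wi-Fi.

```python
import textwrap

# Hypothetical mapping from a recognized voice-command index to a question.
# The questions are the examples used earlier in this article.
COMMAND_QUESTIONS = {
    0: "Who are you?",
    1: "What is the Digikey?",
    2: "How can I contact them?",
}


def wrap_for_lcd(text, chars_per_line=26, max_lines=10):
    """Break text into lines that fit the screen; truncate with '...' if too long.

    The line width and line count are assumed values, not measured ones.
    """
    lines = textwrap.wrap(text, width=chars_per_line)
    if len(lines) > max_lines:
        lines = lines[:max_lines]
        lines[-1] = lines[-1][:chars_per_line - 3].rstrip() + "..."
    return lines


def handle_command(command_id, ask):
    """Map a recognized command to a question, fetch the answer, format it."""
    question = COMMAND_QUESTIONS.get(command_id)
    if question is None:
        return wrap_for_lcd("Command not recognized.")
    try:
        answer = ask(question)
    except OSError:
        # Network failures (including urllib errors) are subclasses of OSError.
        return wrap_for_lcd("Network error. Please try again.")
    return wrap_for_lcd(answer)


def demo_ask(question):
    """Stand-in for the real API call, returning a canned answer."""
    return ("DigiKey is an authorized distributor with headquarters in "
            "Thief River Falls, Minnesota, USA.")


for line in handle_command(1, demo_ask):
    print(line)
```

Separating the three steps (look up the question, call the API, wrap the text) keeps the network error handling in one place and makes the display formatting easy to adjust for a different screen or font size.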

Here is the final output of this project :)


Future Enhancements

Looking ahead, there are several exciting enhancements we could implement to improve the functionality of our voice-activated office assistant:

Audio File Storage: Instead of using real-time speech recognition, we can capture user questions via the microphone and store them as audio files on an SD card. This would allow for better handling of queries and the potential to review previous questions.

Audio-Based API Interaction: Once the audio files are stored, we can send these files to the API for processing. This would enable the assistant to work with audio inputs more flexibly.

Text-to-Speech Responses: To enhance user interaction, we can implement a text-to-speech feature that converts the API's textual responses into audio format. This way, users can receive answers in a spoken format.

Speaker Integration: By integrating a speaker with the Maixduino board, users can listen to the responses directly, making the interaction more engaging and accessible.

These enhancements would not only improve user experience but also expand the capabilities of the assistant, making it more versatile in various office settings.

Conclusion

In this project, we successfully developed a voice-activated office assistant that integrates IoT and Generative AI technologies using the Maixduino board. By leveraging speech recognition, Wi-Fi connectivity, and a user-friendly LCD display, we created a system that allows users to ask questions and receive instant answers based on DigiKey's extensive data.

This project not only demonstrates the potential of combining AI with IoT but also showcases the ease of building smart applications with accessible tools and frameworks. Moving forward, there are opportunities to expand this system by adding more complex queries, enhancing the user interface, and integrating additional data sources.

Overall, this voice-activated assistant represents a significant step towards creating intelligent office environments that can simplify workflows and improve productivity.

The full code is available to view or download in the GitHub repository karthick965938/digikey-assistant.